OCLC Declare OCLC Control Numbers Public Domain

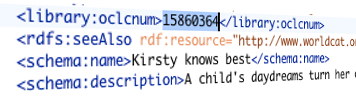

Little things mean a lot. Little things that are misunderstood often mean a lot more. Take the OCLC Control Number, often known as the OCN, for instance. Every time an OCLC bibliographic record is created in WorldCat, it is given a unique number from a sequential set – a process that has already taken place over a billion times. Each number appears in the record it is associated with. Over time these numbers have become a useful part of processing, not only for OCLC and its member libraries but, as a unique identifier proliferated across …