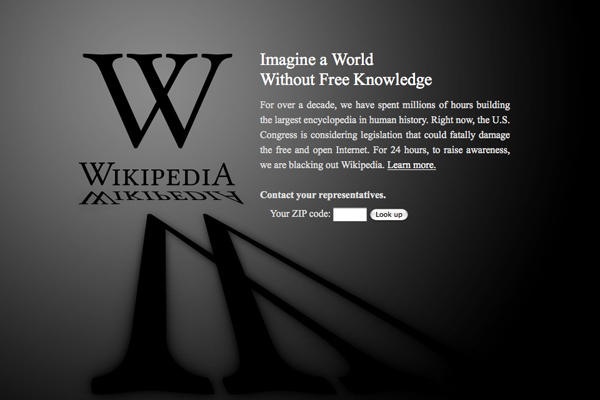

OK, So Who Noticed the SOPA Blackout?

All in all, I believe the campaign has been surprisingly effective on the visible web. What prompted this post, however, was trying to work out how effective it was on the Data Web, which is almost by definition the invisible web. Ahead of the dark day, a movement started on the Semantic Web and Linked Open Data mailing lists to replicate what Wikipedia was doing by taking DBpedia dark.