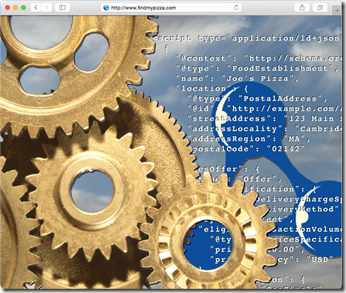

Typical! Since joining OCLC as Technology Evangelist, I have been preparing myself to be one of the first to blog about the release of linked data describing the hundreds of millions of bibliographic items in WorldCat.org. So where am I when the press release hits the net? 35,000 feet above the North Atlantic heading for LAX, that’s where – life just isn’t fair. By the time I am checked in to my Anaheim hotel, ready for the ALA Conference, this will be old news. Nevertheless it is significant news, significant in many ways. OCLC have been at the leading edge …