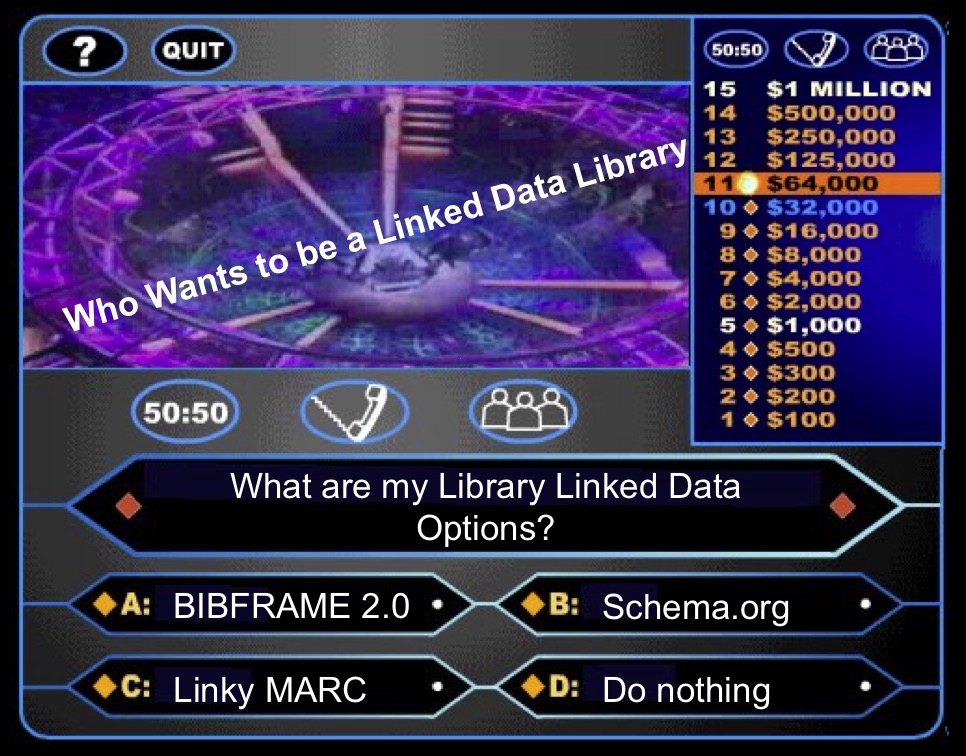

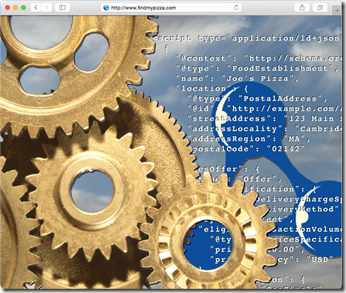

From MARC to BIBFRAME and Schema.org in a Knowledge Graph

The MARC ingestion pipeline is one of four pipelines that keep the Knowledge Graph that underpins the LDMS synchronised with additions, updates, and deletions from the many source systems that NLB curate and host.
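To make "synchronised with additions, updates, and deletions" more concrete, here is a minimal sketch of how such a pipeline might apply change events. It is not NLB's implementation. It assumes that each source record's triples live in their own named graph, so that an update can replace the whole graph and a delete can drop it. The names `KnowledgeGraph`, `marc_to_triples`, and `apply`, and the `http://example.org/work/` URIs, are hypothetical. The MARC record is simplified to a dict of fields and subfields, and only 245 $a (title proper) is mapped, to Schema.org `name`.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

Triple = Tuple[str, str, str]
SCHEMA_NAME = "http://schema.org/name"


def marc_to_triples(record_id: str, fields: Dict[str, Dict[str, str]]) -> Set[Triple]:
    """Hypothetical mapping: MARC 245 $a (title proper) -> schema:name."""
    subject = f"http://example.org/work/{record_id}"  # illustrative URI pattern
    triples: Set[Triple] = set()
    title = fields.get("245", {}).get("a")
    if title:
        triples.add((subject, SCHEMA_NAME, title))
    return triples


@dataclass
class KnowledgeGraph:
    # One named graph per source record (an assumption), keyed by record ID,
    # so a record's triples can be replaced or dropped as a unit.
    graphs: Dict[str, Set[Triple]] = field(default_factory=dict)

    def apply(self, op: str, record_id: str,
              fields: Optional[Dict[str, Dict[str, str]]] = None) -> None:
        if op in ("add", "update"):
            # Replace the record's graph wholesale so that triples derived
            # from an older version of the record do not linger.
            self.graphs[record_id] = marc_to_triples(record_id, fields or {})
        elif op == "delete":
            # Dropping the graph removes everything derived from the record.
            self.graphs.pop(record_id, None)
        else:
            raise ValueError(f"unknown operation: {op}")

    def triples(self) -> Set[Triple]:
        # Union of all per-record graphs (the "default" view of the graph).
        return set().union(*self.graphs.values())


kg = KnowledgeGraph()
kg.apply("add", "b100", {"245": {"a": "Old title"}})
kg.apply("update", "b100", {"245": {"a": "New title"}})
kg.apply("add", "b200", {"245": {"a": "Another book"}})
kg.apply("delete", "b200")
print(kg.triples())
# {('http://example.org/work/b100', 'http://schema.org/name', 'New title')}
```

Replacing a record's graph as a whole keeps updates simple. The pipeline never has to diff old and new triples, at the cost of rewriting every triple for a record on each change.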