Bibliographic Records in a Knowledge Graph of Shared Systems

My recent presentations at SWIB23 (YouTube) and the Bibframe Workshop in Europe attracted many questions regarding the how, what, and most importantly the why of the unique cloud-based Linked Data Management & Discovery System (LDMS) we developed, in partnership with metaphacts and Kewmann, in a two-year project for the National Library Board of Singapore (NLB). Several of the answers were technical in nature. However, somewhat surprisingly, they were mostly grounded in what could best be described as business needs, such as: Let me explore those a little… ✤ Seamless Knowledge Graph integration of records stored, and managed in separate …

From MARC to BIBFRAME and Schema.org in a Knowledge Graph

The MARC ingestion pipeline is one of four pipelines that keep the Knowledge Graph, underpinning the LDMS, synchronised with additions, updates, and deletions from the many source systems that NLB curate and host.

Library Metadata Evolution: The Final Mile

When Schema.org arrived on the scene I thought we might have arrived at the point where library metadata could finally blossom; adding value outside of library systems to help library curated resources become first class citizens, and hence results, in the global web we all inhabit. But as yet it has not happened.

Something For Archives in Schema.org

The recent release of the Schema.org vocabulary (version 3.5) includes new types and properties, proposed by the W3C Schema Architypes Community Group, specifically targeted at facilitating the web sharing of archives data to aid discovery. When the Group, which I have the privilege to chair, approached the challenge of building a proposal to make Schema.org useful for archives, it was identified that the vocabulary could already be used to describe the things & collections that you find in archives. What was missing was the ability to identify the archive holding organisation, and the fact that an item is being held …

Bibframe – Schema.org – Chocolate Teapots

In a session at the IFLA WLIC in Kuala Lumpur, my core theme was that there is a need to use two [linked data] vocabularies when describing library resources: Bibframe for cataloguing and [linked] metadata interchange, and Schema.org for sharing on the web for discovery.

Schema.org Introduces Defined Terms

Do you have a list of terms relevant to your data?

Things such as subjects, topics, job titles, a glossary or dictionary of terms, blog post categories, ‘official names’ for things/people/organisations, material types, forms of technology, etc.

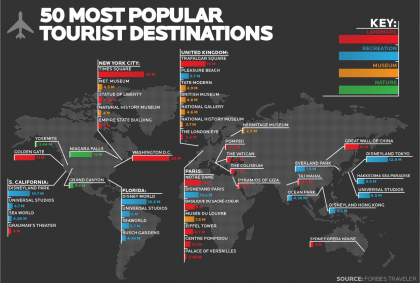
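As a rough illustration of how such a list of terms might be expressed, here is a minimal sketch that builds a Schema.org `DefinedTermSet` containing two `DefinedTerm` entries and serialises it as JSON-LD. The term names, codes, and the `https://example.org/material-types` URL are hypothetical placeholders; the helper function `defined_term` is likewise an assumption made for this example, not part of any library.

```python
import json

# Hypothetical identifier for the term set; replace with your own URL.
TERM_SET_URL = "https://example.org/material-types"


def defined_term(name, code, term_set_url):
    """Build one schema.org DefinedTerm as a plain dictionary."""
    return {
        "@type": "DefinedTerm",
        "name": name,
        "termCode": code,
        "inDefinedTermSet": term_set_url,
    }


# A DefinedTermSet listing its member terms via hasDefinedTerm.
term_set = {
    "@context": "https://schema.org",
    "@type": "DefinedTermSet",
    "@id": TERM_SET_URL,
    "name": "Material types",
    "hasDefinedTerm": [
        defined_term("Book", "BK", TERM_SET_URL),
        defined_term("Map", "MP", TERM_SET_URL),
    ],
}

# Serialise to JSON-LD text, ready to embed in a page.
jsonld = json.dumps(term_set, indent=2)
print(jsonld)
```

The resulting JSON-LD could be placed in a `<script type="application/ld+json">` element on the page that presents the term list.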

Schema.org Significant Updates for Tourism and Trips

The latest release of Schema.org (3.4) includes some significant enhancements for those interested in marking up tourism, and trips in general.

For tourism markup, two new types, TouristDestination and TouristTrip, have joined the already useful TouristAttraction.

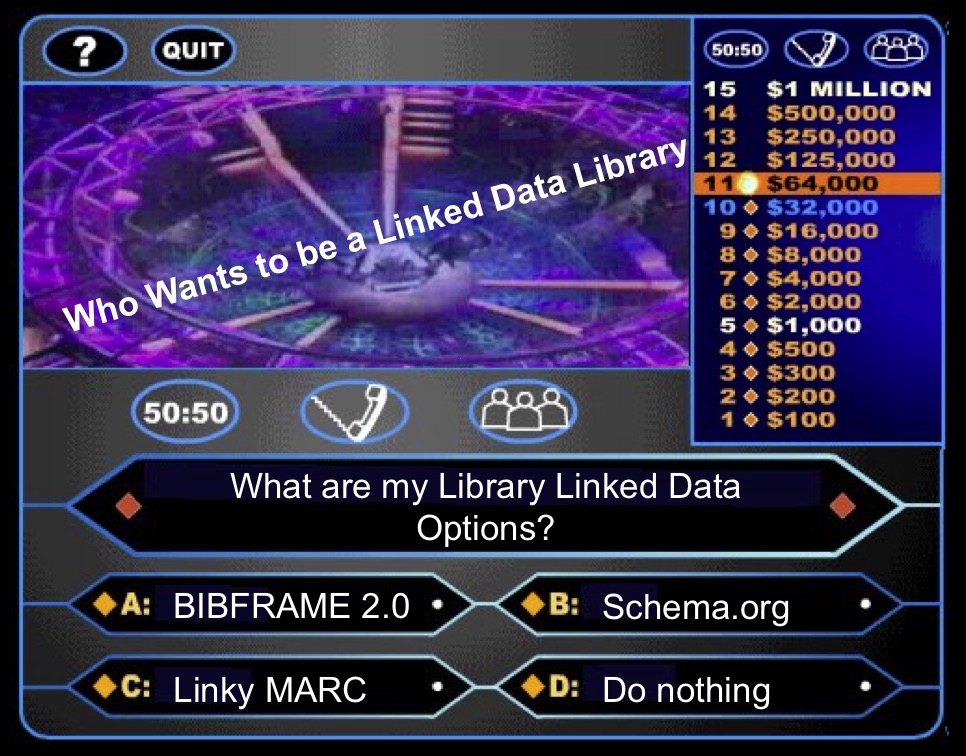

The Three Linked Data Choices for Libraries

We are [finally] on the cusp of establishing a de facto Linked Data approach for libraries and their system suppliers – not there yet but getting there.

We have a choice between BIBFRAME 2.0, Schema.org, Linky MARC and doing nothing.

Structured Data: Helping Google Understand Your Site

Add Schema.org structured data to your pages because during indexing, we will be able to better understand what your site is about.

Schema.org for Tourism

These TouristAttraction enhancements have significantly improved the capability for describing Tourist Attractions, hopefully enabling more tourist discoveries.

Schema.org: Describing Global Corporations Local Cafés And Everything In-between

There have been discussions in Schema.org about the way Organizations, their offices, branches, and other locations can be marked up; they exposed a lack of clarity in the way to structure descriptions of Organizations and their locations, offices, branches, etc.

To address that lack of clarity I thought it would be useful to share some examples here.
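One possible structure, shown here only as a minimal sketch and not as the examples from the original post, links a parent `Corporation` to a local branch using the Schema.org `subOrganization` and `parentOrganization` properties, with each carrying its own `PostalAddress`. All names, addresses, and the `https://example.com/#org` identifier are hypothetical, as is the `postal_address` helper.

```python
import json

# Hypothetical identifier for the parent organization.
ORG_ID = "https://example.com/#org"


def postal_address(street, locality, country):
    """Build a schema.org PostalAddress as a plain dictionary."""
    return {
        "@type": "PostalAddress",
        "streetAddress": street,
        "addressLocality": locality,
        "addressCountry": country,
    }


# The parent corporation, with one branch nested under subOrganization.
corporation = {
    "@context": "https://schema.org",
    "@type": "Corporation",
    "@id": ORG_ID,
    "name": "Example Coffee Group",
    "address": postal_address("1 Head Office Way", "London", "GB"),
    "subOrganization": [
        {
            "@type": "CafeOrCoffeeShop",
            "name": "Example Coffee, High Street",
            # Point back at the parent by its identifier.
            "parentOrganization": {"@id": ORG_ID},
            "address": postal_address("22 High Street", "Birmingham", "GB"),
        }
    ],
}

org_jsonld = json.dumps(corporation, indent=2)
print(org_jsonld)
```

Nesting the branch under the parent keeps the relationship explicit in both directions; other arrangements, such as describing each location on its own page and linking by `@id`, are equally plausible.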

A Discovery Opportunity for Archives?

So why am I now suggesting that there may be an opportunity for the discovery of archives and their resources?

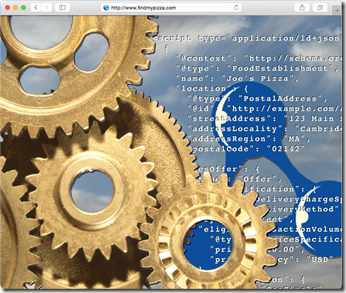

Testing Schema.org output formats

Part of my efforts working with Google in support of the Schema.org structured web data vocabulary, its extensions, usage and implementation, is to introduce new functionality and facilities on to the Schema.org site.

I have recently concluded a piece of work to improve accessibility to the underlying definition of vocabulary terms in various data formats, which is now available for testing and comment.

Hidden Gems in the new Schema.org 3.1 Release

I spend a significant amount of time working on the supporting software, vocabulary contents, and application of Schema.org. So it is with great pleasure, and a certain amount of relief, that I announce the release of Schema.org 3.1 and share some hidden gems you will find in there.

A Fundamental Component of a New Web?

Let me explain what this fundamental component of what I see as a potential New Web is, and what I mean by New Web.

You might be surprised to learn that the fundamental component I am talking about is a vocabulary – Schema.org.

Evolving Schema.org in Practice Pt3: Choosing Where to Extend

OK. You have read the previous posts in this series. You have said to yourself, "I only wish that I could describe [insert your favourite issue here] in Schema.org." You are now inspired to do something about it, or get together with a community of colleagues to address the usefulness of Schema.org for your area of interest. Then comes the inevitable question: Where do I focus my efforts – the core vocabulary or a Hosted Extension or an External Extension?

Evolving Schema.org in Practice Pt2: Working Within the Vocabulary

Having covered the working environment, I now intend to describe some of the important files that make up Schema.org and how you can work with them to create or update examples and term definitions within your local forked version.

Evolving Schema.org in Practice Pt1: The Bits and Pieces

I am often asked by people with ideas for extending or enhancing Schema.org how they go about it. These requests inevitably fall into two categories – either ‘How do I decide upon and organise my new types & properties and relate them to other vocabularies and ontology’ or ‘now I have my proposals, how do I test, share, and submit them to the Schema.org community?’

I touch on both of these areas in a free webinar I recorded for DCMI/ASIS&T a couple of months ago. It is the second in a two-part series, Schema.org in Two Parts: From Use to Extension. The first part covers the history of Schema.org and the development of extensions. That part is based upon my experiences applying and encouraging the use of Schema.org with bibliographic resources, including the set up and work of the Schema Bib Extend W3C Community Group – bibliographically focused but of interest to anyone looking to extend Schema.org.

The focus of this post is on answering the ‘now I have my proposals, how do I test, share, and submit them to the Schema.org community?’ question.

Schema.org – Extending Benefits

I find myself in New York for the day on my way back from the excellent Smart Data 2015 Conference in San Jose. It’s a long story about red-eye flights and significant weekend savings which I won’t bore you with, but it did result in some great chill-out time in Central Park to reflect on the week.

In its long auspicious history the SemTech, Semantic Tech & Business, and now Smart Data Conference has always attracted a good cross section of the best and brightest in Semantic Web, Linked Data, Web, and associated worlds. This year was no different.

In my new role as an independent working with OCLC and at Google, I was there on behalf of OCLC to review significant developments with Schema.org in general – now with 640 Types (Classes) & 988 properties – used on over 10 Million web sites.

Is There Still a Case for Aggregations of Cultural Data

A bit of a profound question – triggered by a guest post on Museums Computer Group by Nick Poole, CEO of The Collections Trust, about Culture Grid and an overview of recent announcements about it. Broadly the changes are that:

- The Culture Grid closed to ‘new accessions’ (ie. new collections of metadata) on the 30th April
- The existing index and API will continue to operate in order to ensure legacy support
- Museums, galleries, libraries and archives wishing to contribute material to Europeana can still do so via the ‘dark aggregator’, which the Collections Trust will continue to fund
- Interested parties …
