A Step for Schema.org – A Leap for Bib Data on the Web

Several significant bibliographic-related proposals were brought together in a package which, I take great pleasure in reporting, was included in the latest v1.9 release of Schema.org.

As is often the way, you start a post without realising that it is part of a series of posts – as with the first in this series. That one – Entification, the following one – Hubs of Authority and this, together map out a journey that I believe the library community is undertaking as it evolves from a record based system of cataloguing items towards embracing distributed open linked data principles to connect users with the resources they seek. Although grounded in much of the theory and practice I promote and engage with, in my role as Technology Evangelist …

As is often the way, you start a post without realising that it is part of a series of posts – as with the first in this series. That one – Entification, and the next in the series – Beacons of Availability, together map out a journey that I believe the library community is undertaking as it evolves from a record based system of cataloguing items towards embracing distributed open linked data principles to connect users with the resources they seek. Although grounded in much of the theory and practice I promote and engage with, in my role as Technology …

The phrase ‘getting library data into a linked data form’ hides a multitude of issues. There are some obvious steps such as holding and/or outputting the data in RDF, providing resources with permanent URIs, etc. However, deriving useful library linked data from a source, such as a MARC record, requires far more than giving it a URI and encoding what you know, unchanged, as RDF triples.

Two, on the surface, totally unconnected posts – yet the same message. Well, that’s how they seem to me anyway.

Post 1 – The Problem With Wikidata from Mark Graham writing in the Atlantic. Post 2 – Danbri has moved on – should we follow? by a former colleague Phil Archer.

An ambition for the web is to reflect and assist what we humans do in the real world. Search has only brought us part of the way. By identifying key words in web page text, and links between those pages, it makes a reasonable stab at identifying things that might be related to the keywords we enter.

As I commented recently, Semantic Search messages coming from Google indicate that they are taking significant steps towards the ambition. By harvesting Schema.org described metadata embedded in html

Most Semantic Web and Linked Data enthusiasts will tell you that Linked Data is not rocket science, and it is not. They will tell you that RDF is one of the simplest data forms for describing things, and they are right. They will tell you that adopting Linked Data makes merging disparate datasets much easier to do, and it does. They will say that publishing persistent globally addressable URIs (identifiers) for your things and concepts will make it easier for others to reference and share them, and it will. They will tell you that it will enable you to add value …

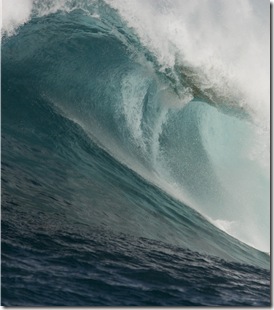

I believe Data, or more precisely changes in how we create, consume, and interact with data, has the potential to deliver a seventh wave impact. With the advent of many data associated advances, variously labelled Big Data, Social Networking, Open Data, Cloud Services, Linked Data, Microformats, Microdata, Semantic Web, Enterprise Data, it is now venturing beyond those closed systems into the wider world. It is precisely because these trends have been around for a while, and are starting to mature and influence each other, that they are building to form something really significant.

The Open Knowledge Foundation, in association with the Peer 2 Peer University, have announced plans to launch a School of Data to increase the skill base for data wranglers and scientists. This is welcome, not just because they are doing it, but also because of who they are and the direction they are taking.